How Does AI Generate Images? A Guide to 2026 Techniques

Imagine describing a breathtaking scene and watching it instantly appear as a unique, vivid image. In 2026, AI art generators are turning this vision into reality for creators, marketers, and everyday users.

But how does AI generate images from just a few words or ideas? This article unpacks the science and technology behind the latest AI art tools, revealing the models and processes that make this creative magic possible.

Discover the core techniques, step-by-step mechanics, and real-world uses that are shaping the future of digital art. Get ready to see how AI is revolutionizing the way we turn imagination into imagery.

Direct Answer: How Does AI Generate Images?

AI generates images by learning patterns from vast datasets of existing images and text. Many of today's leading tools use diffusion models, a type of neural network that starts with random noise and refines it step by step, guided by a text prompt, until the noise becomes a clear, coherent image that matches the description.

What Is AI Image Generation?

AI image generation is the process of using artificial intelligence to create images from text descriptions, data, or existing visuals. These models learn patterns from large image datasets and can generate realistic or artistic images automatically, without manual drawing or photography.

How AI Understands and Processes Visual Data

To understand how AI generates images, we must look at artificial neural networks (ANNs), computing systems loosely inspired by how the human brain processes information. These AI systems are trained on vast datasets containing millions, and in some cases billions, of image-text pairs, learning to recognize patterns, styles, and objects across diverse contexts.

During training, the AI analyzes countless examples, gradually understanding visual features like color, shape, and composition. This enables generative models to create new images by sampling from the patterns and distributions they have learned. For instance, platforms such as DALL-E, Midjourney, and Stable Diffusion can craft realistic or imaginative visuals from simple prompts.

High-quality image generation depends on diverse training data and accurate captions. Models exposed to a wide array of images produce more versatile results. However, these systems also inherit limitations and biases from their training data, which can affect output accuracy.

AI art generators blend multiple styles, concepts, and attributes to create images that match user intent. If you are curious to see this process in action, platforms like the AI Image Generator Tool offer hands-on experience, letting users experiment with different prompts and styles.

Natural Language Processing (NLP) and Prompt Engineering

Understanding how AI generates images also involves examining how it interprets user instructions. Natural Language Processing (NLP) models, often called text encoders in this context, convert text prompts into numerical embeddings, enabling the system to capture the meaning and context behind each request.

The specificity of a prompt plays a significant role in the final image. A single word change can shift the entire outcome, highlighting the importance of precise language. Advances in prompt engineering, such as reusable templates and prompt libraries, help users achieve more accurate and creative results.
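Reusable templates can be as simple as string formatting. The sketch below is a minimal illustration, assuming a hypothetical template with `subject`, `setting`, `style`, and `lighting` slots and a hypothetical `PRESETS` dictionary standing in for a prompt library; real image generators accept free-form text and do not require this structure.

```python
# Hypothetical template: the slot names are illustrative, not required by any tool.
TEMPLATE = "{subject} in {setting}, {style}, {lighting}"

# Hypothetical prompt library: named presets that fill the style-related slots.
PRESETS = {
    "watercolor": {"style": "watercolor style", "lighting": "soft warm light"},
    "cinematic": {"style": "cinematic photo", "lighting": "dramatic rim lighting"},
}

def build_prompt(template: str, **slots: str) -> str:
    """Fill every {slot} in the template; str.format raises KeyError if one is missing."""
    return template.format(**slots)

def from_preset(preset: str, subject: str, setting: str) -> str:
    """Combine a saved preset with the parts of the prompt that change each time."""
    return build_prompt(TEMPLATE, subject=subject, setting=setting, **PRESETS[preset])

print(from_preset("watercolor", "A futuristic city", "the desert at sunset"))
# A futuristic city in the desert at sunset, watercolor style, soft warm light
```

Swapping only the preset name changes style and lighting while keeping the subject fixed, which makes it easy to compare how each wording change affects the output.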

Modern AI image generators rely on tight integration between NLP models and visual generation systems. This pipeline is designed to translate the intended concept faithfully into an image. Emerging trends, like multimodal models, are pushing boundaries by allowing AI to blend text, images, and even audio for richer creative outputs.

As the field evolves, understanding how AI generates images prepares users to make the most of these innovative tools, driving creativity across industries.

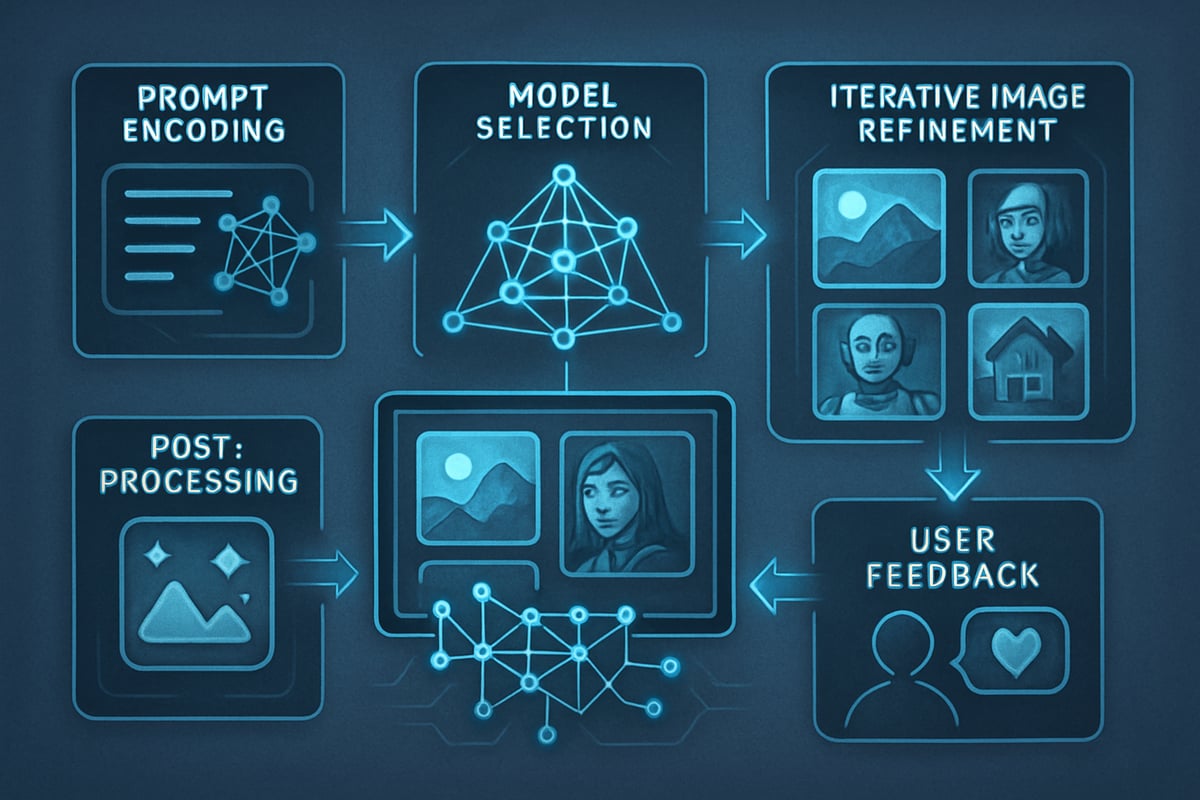

Step-by-Step: How AI Generates Images in 2026

AI image generation in 2026 is a multi-stage process that blends cutting-edge neural networks, language understanding, and user interaction. To answer "How does AI generate images?", let's walk through each step, from your initial idea to the final masterpiece.

Step 1: Encoding the User Prompt

The process starts when a user submits a prompt, such as a descriptive phrase, sometimes accompanied by a reference image. A text encoder, a type of Natural Language Processing (NLP) model, analyzes the prompt, extracting meaning, intent, and context, while any reference image is handled by a separate image encoder. This information is transformed into a vector embedding: a rich, multi-dimensional numerical representation.

For example, the prompt "A futuristic city at sunset in watercolor style" is broken down into key concepts like setting, time of day, and artistic style. The AI uses this embedding as a blueprint for an image that matches the user's intent.

Prompt encoding is crucial, as even a small change in wording can produce a dramatically different image. Specificity and clarity in prompts lead to more accurate results.
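To see why a one-word change matters, here is a deliberately simplified sketch. It swaps in a bag-of-words embedding in place of a real text encoder (production systems use learned transformer encoders, such as the CLIP text encoder in Stable Diffusion), and the helper names `build_vocab`, `embed`, and `cosine` are hypothetical. It measures how far a prompt's vector moves when a single word changes.

```python
import math

def build_vocab(prompts: list[str]) -> dict[str, int]:
    """Assign each distinct lowercase word an index (toy stand-in for a tokenizer)."""
    words = sorted({w for p in prompts for w in p.lower().split()})
    return {w: i for i, w in enumerate(words)}

def embed(prompt: str, vocab: dict[str, int]) -> list[float]:
    """Bag-of-words vector scaled to unit length; ignores word order, unlike real encoders."""
    vec = [0.0] * len(vocab)
    for w in prompt.lower().split():
        if w in vocab:
            vec[vocab[w]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two unit-length vectors is just their dot product."""
    return sum(x * y for x, y in zip(a, b))

original = "A futuristic city at sunset in watercolor style"
variant = "A futuristic city at sunrise in watercolor style"
vocab = build_vocab([original, variant])
similarity = cosine(embed(original, vocab), embed(variant, vocab))
print(f"similarity after one-word change: {similarity:.3f}")  # 7 of 8 words shared -> 0.875
```

In a learned encoder the shift depends on meaning rather than word overlap, so "sunset" and "sunrise" may land closer together or farther apart than this toy suggests.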

Step 2: Selecting the Generation Model

Once the prompt is encoded, a generative model turns it into an image. Depending on the platform and task, this may be a Generative Adversarial Network (GAN), a Diffusion Model, a Variational Autoencoder (VAE), or Neural Style Transfer (NST); some platforms let users choose between models or modes. Each model excels in different areas, such as realism, creative style, or speed.

Hybrid approaches are increasingly common, combining strengths from multiple models. For example, latent diffusion systems such as Stable Diffusion pair a VAE, which compresses images into a compact latent space, with a diffusion model that generates within that space. If the prompt is for a logo, platforms like the AI-Powered Logo Generator may use tailored models for crisp, scalable graphics.
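In latent diffusion systems such as Stable Diffusion, a VAE compresses the image before the diffusion model runs, and the savings can be made concrete with shape arithmetic. This minimal sketch assumes the settings commonly cited for Stable Diffusion v1 (the VAE downsamples each side by 8 and produces 4 latent channels); other models use different values, and `latent_shape` and `compression_ratio` are hypothetical helpers.

```python
def latent_shape(height: int, width: int, downsample: int = 8, channels: int = 4) -> tuple[int, int, int]:
    """Shape (channels, height, width) of the VAE latent for an RGB image of the given size."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be divisible by the downsampling factor")
    return (channels, height // downsample, width // downsample)

def compression_ratio(height: int, width: int, **kwargs: int) -> float:
    """How many times fewer numbers the diffusion model handles compared with raw RGB pixels."""
    c, h, w = latent_shape(height, width, **kwargs)
    return (3 * height * width) / (c * h * w)

print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

Running the diffusion process on roughly 48 times fewer values is part of why this hybrid design is practical on ordinary GPUs.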

Model selection is key to producing images that are both context-aware and visually compelling.

Step 3: Image Generation and Iterative Refinement

With the model selected, the AI generates an initial image, often starting from random noise, a template, or a reference. The model then refines it iteratively: each cycle reduces visual noise, sharpens features, and aligns the result more closely with the encoded prompt.

During training, loss functions teach the model to balance content accuracy, style fidelity, and realism; at generation time, guidance techniques steer each refinement step toward the prompt. For instance, diffusion models gradually transform a noisy canvas into a detailed scene. This step is central to how AI produces images with increasing clarity and sophistication.
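Loss functions shape the model during training; at generation time, many text-to-image diffusion systems align each refinement step with the prompt using classifier-free guidance. The sketch below shows only the combination step, assuming the model has already produced two noise predictions for the current image, one with the prompt and one without; the function name and the plain-list representation are illustrative.

```python
def classifier_free_guidance(eps_uncond: list[float], eps_cond: list[float], scale: float) -> list[float]:
    """Push the noise prediction away from the unconditional one, toward the prompt.

    scale = 1.0 reproduces the prompt-conditioned prediction; larger values follow the
    prompt more strongly, often at some cost to variety and naturalness.
    """
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]

# Toy predictions for a 2-value "image"
print(classifier_free_guidance([0.0, 1.0], [1.0, 1.0], scale=7.5))  # [7.5, 1.0]
```

Stable Diffusion pipelines commonly default to a scale of around 7.5. Where the two predictions already agree, as in the second value, guidance leaves the result unchanged.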

This process enables AI to create anything from lifelike portraits to imaginative landscapes, all guided by the user's prompt.

Step 4: Post-Processing and Output Delivery

After refinement, the AI applies finishing touches. This may include color correction, upscaling for higher resolution, and removal of any artifacts. The final image is delivered to the user, often with options to make further edits or generate multiple variants for selection.
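Two of these finishing touches can be sketched on a tiny grayscale image stored as nested lists. This is a minimal illustration only: production upscalers are usually learned super-resolution models rather than pixel repetition, and the helper names are hypothetical.

```python
def upscale_nearest(image: list[list[int]], factor: int = 2) -> list[list[int]]:
    """Nearest-neighbor upscaling: repeat each pixel `factor` times in both directions."""
    out = []
    for row in image:
        wide = [px for px in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out

def adjust_brightness(image: list[list[int]], delta: int) -> list[list[int]]:
    """Simple color correction: shift every 8-bit value and clamp it to the 0..255 range."""
    return [[max(0, min(255, px + delta)) for px in row] for row in image]

small = [[10, 250], [128, 0]]
print(upscale_nearest(small))
print(adjust_brightness(small, 10))  # [[20, 255], [138, 10]]
```

Nearest-neighbor upscaling keeps hard edges but adds no new detail, which is exactly the gap learned upscalers try to fill.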

For example, a user generating a logo can receive several options, each uniquely styled yet faithful to the original brief. This stage turns raw model output into polished, professional-quality images ready for real-world use.

Post-processing bridges the gap between AI creativity and user satisfaction, making the outputs versatile and practical.

Step 5: User Feedback and Continuous Learning

The last step involves collecting user feedback to improve the system. Users rate, edit, or select images, and this data is fed back into the AI platform. Continuous learning helps models adapt to user preferences and can help reduce biases inherited from training data.
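A first step in using such feedback is turning raw selections into per-variant scores. This sketch assumes a hypothetical event log where each entry records which variants a user was shown and which one they picked; how a real platform stores and uses these signals is not specified here.

```python
from collections import Counter

def selection_rates(events: list[tuple[list[str], str]]) -> dict[str, float]:
    """Fraction of the times each variant was shown that a user chose it."""
    shown = Counter()
    chosen = Counter()
    for variants, pick in events:
        shown.update(variants)
        chosen[pick] += 1
    return {variant: chosen[variant] / shown[variant] for variant in shown}

log = [
    (["watercolor", "cinematic"], "watercolor"),
    (["watercolor", "line-art"], "line-art"),
    (["cinematic", "line-art"], "line-art"),
]
print(selection_rates(log))  # {'watercolor': 0.5, 'cinematic': 0.0, 'line-art': 1.0}
```

Dividing by how often each variant was shown avoids rewarding a style simply because it appeared more often.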

Leading platforms involve over a million users in these feedback loops, enabling rapid evolution and diversity in generated content. This ongoing process helps AI generate images that are increasingly relevant and personalized.

User insights drive the next generation of AI art, helping each new model improve on the last.

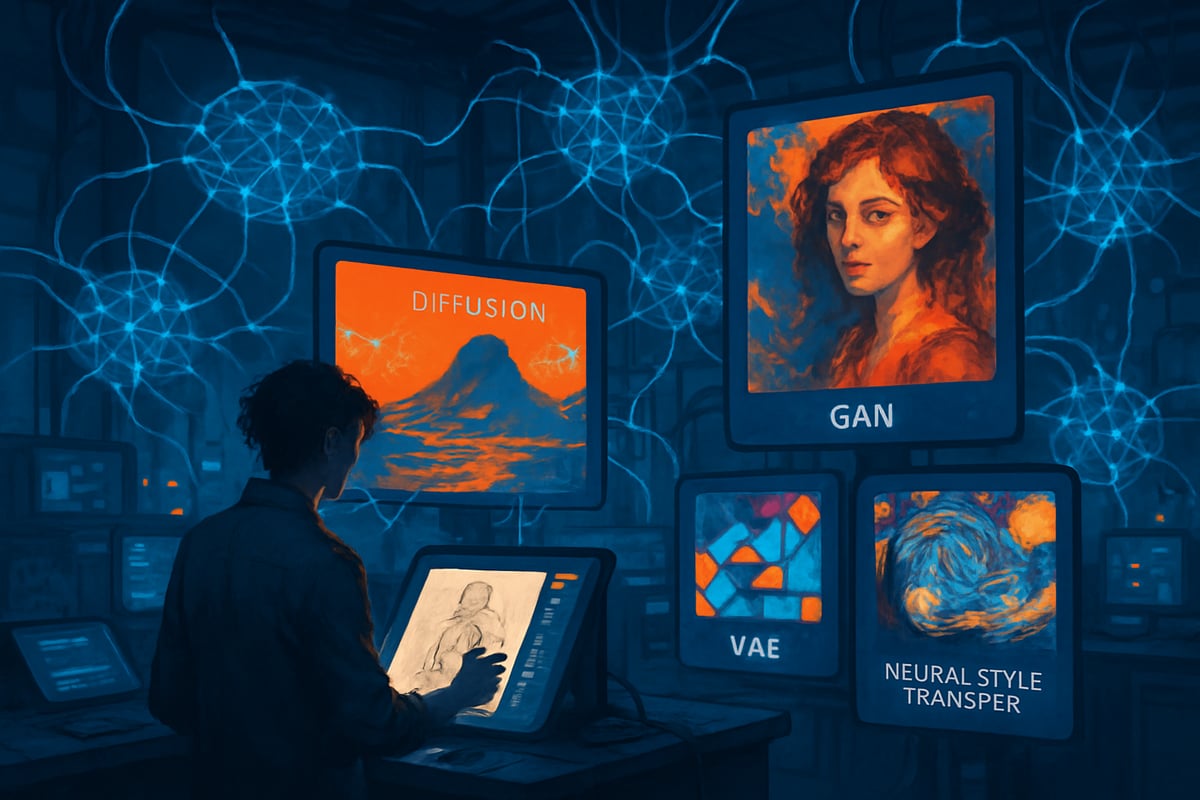

Core AI Image Generation Models and Techniques

Understanding the models behind AI image generation is crucial for anyone interested in the technology’s progress. These models are the engines behind today’s most advanced image generators, each with unique strengths, limitations, and applications. Let’s explore the foundational techniques shaping AI art in 2026.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) were among the first models to generate convincing, high-resolution images. A GAN uses a two-part system: a generator that creates images and a discriminator that judges whether they are real or generated. The two networks train together, each improving as the generator tries to fool the discriminator.
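The generator-versus-discriminator game is usually written as two loss functions. This sketch follows the standard binary cross-entropy formulation from the original GAN paper, with the commonly used non-saturating generator loss; it works on single discriminator outputs (probabilities between 0 and 1) rather than on real networks, and in practice the losses are averaged over a batch.

```python
import math

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Penalize the discriminator unless D(real) is near 1 and D(fake) is near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """Non-saturating loss: the generator is rewarded when D(fake) approaches 1."""
    return -math.log(d_fake)

# At the theoretical equilibrium the discriminator can only guess, outputting 0.5 everywhere.
print(round(discriminator_loss(0.5, 0.5), 4))  # 1.3863, i.e. 2 * ln 2
print(generator_loss(0.9) < generator_loss(0.1))  # True: fooling D lowers the loss
```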

Over the years, GANs have evolved from basic implementations to sophisticated versions like StyleGAN and BigGAN. These advanced models can create hyper-realistic portraits, fashion designs, and even unique artistic compositions. For example, GANs are responsible for producing synthetic faces so convincing they are nearly indistinguishable from real photos.

The impact of GANs is clear in industries such as entertainment and media. Their ability to create high-resolution visuals, often up to 1024x1024 pixels, has revolutionized digital art and design. However, GANs can sometimes produce artifacts or struggle with very complex scenes. Despite these challenges, their creative power keeps them an important part of AI image generation.

Diffusion Models

Diffusion models have rapidly become the backbone of many advanced AI art tools, making them essential to understanding how AI generates images. These models start with random noise and gradually refine it into a coherent image. Each step removes a bit more noise, revealing details and structure.
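The "noise" side of this process can be written down exactly. The sketch below assumes the linear noise schedule from the DDPM paper (1,000 steps, with per-step noise variance rising from 0.0001 to 0.02) and shows only the forward noising process used in training; generation runs it in reverse with a trained network that predicts the noise to remove, which is not shown.

```python
import math
import random

def linear_alpha_bars(steps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Cumulative signal fraction alpha_bar_t = prod(1 - beta_s) for s <= t under a linear schedule."""
    alpha_bars, running = [], 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0: list[float], alpha_bar: float, rng: random.Random) -> list[float]:
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise, noise ~ N(0, 1)."""
    keep, mix = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [keep * x + mix * rng.gauss(0.0, 1.0) for x in x0]

alpha_bars = linear_alpha_bars()
print(f"signal kept at step 1: {alpha_bars[0]:.4f}, at step 1000: {alpha_bars[-1]:.1e}")
noisy = add_noise([0.5, -0.5, 1.0], alpha_bars[499], random.Random(0))
```

By the final step almost none of the original signal remains, which is why generation can begin from pure random noise.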

The flexibility of diffusion models allows them to handle a wide range of styles and compositions. Popular platforms like DALL-E 3, Stable Diffusion, and Midjourney rely on this approach to turn abstract prompts into high-fidelity images. For instance, a user can describe a surreal landscape, and the model will iteratively build it from scratch.

Diffusion models scale well, and with optimized samplers they often generate an image in less than 10 seconds on modern hardware, although their many denoising steps make them more computationally demanding than a single-pass GAN. Their ability to produce crisp, photorealistic outputs makes them a favorite among artists and businesses alike. This continued innovation is a driving force in AI image generation today.

Variational Autoencoders (VAEs)

Variational Autoencoders (VAEs) play a distinct role in AI image generation, focusing on controlled creativity and exploration. VAEs use an encoder-decoder structure: the encoder compresses each image into a compact numerical representation in a latent space, and the decoder reconstructs images from points in that space, so sampling new points yields new images.
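Two formulas make the encoder-decoder idea concrete. In a standard VAE, the encoder outputs a mean and a log-variance for each latent dimension, a latent vector is sampled with the reparameterization trick, and training adds a KL-divergence penalty that keeps the latent space close to a standard normal distribution, which is what makes sampling new points meaningful. The sketch below assumes plain Python lists in place of real encoder outputs.

```python
import math
import random

def reparameterize(mu: list[float], log_var: list[float], rng: random.Random) -> list[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), where sigma = exp(log_var / 2)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu: list[float], log_var: list[float]) -> float:
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

z = reparameterize([0.3, -1.2], [-2.0, -2.0], random.Random(42))
print(z)  # a random point near the encoder's mean
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0: already a standard normal
print(kl_to_standard_normal([1.0], [0.0]))  # 0.5: a shifted mean is penalized
```

Keeping the randomness in `eps` rather than in the learned values lets gradients flow back through `mu` and `log_var` during training.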

One of the strengths of VAEs is their ability to blend styles and morph concepts. Designers can use VAEs to create multiple logo variants or explore subtle changes in visual themes. This makes VAEs valuable for tasks that require creative iteration and fine-tuning.
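Morphing between concepts amounts to walking through latent space. This minimal sketch assumes two latent vectors already produced by a VAE encoder (the values are hypothetical); each point on the path would be passed to the decoder, not shown, to produce an in-between image. Linear interpolation is used for simplicity; spherical interpolation is a common alternative for Gaussian latents.

```python
def lerp(z_a: list[float], z_b: list[float], t: float) -> list[float]:
    """Point a fraction t of the way from z_a to z_b."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]

def interpolation_path(z_a: list[float], z_b: list[float], steps: int) -> list[list[float]]:
    """Evenly spaced latent vectors from z_a to z_b inclusive (steps >= 2)."""
    return [lerp(z_a, z_b, i / (steps - 1)) for i in range(steps)]

logo_a = [0.0, 2.0]  # hypothetical latent for one logo variant
logo_b = [4.0, 6.0]  # hypothetical latent for another
print(interpolation_path(logo_a, logo_b, 3))  # [[0.0, 2.0], [2.0, 4.0], [4.0, 6.0]]
```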

While VAEs typically produce blurrier images than GANs and diffusion models, their smooth latent space is well suited to controlled exploration. They are especially useful in research and early-stage design, offering precision and flexibility.

Neural Style Transfer (NST)

Neural Style Transfer (NST) takes a different approach, merging the content of one image with the style of another. Using convolutional neural networks, NST extracts features from both the content image and the style image, then recombines them to create something new.
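In the classic formulation of NST, "style" is captured by correlations between a CNN's feature channels, summarized as a Gram matrix, and a style loss compares the Gram matrices of the generated and style images. The sketch below assumes the feature maps have already been extracted (typically from a pretrained network such as VGG) and flattened to plain lists; the normalization used is one common choice among several.

```python
def gram_matrix(features: list[list[float]]) -> list[list[float]]:
    """features holds C channels, each a flattened list of N spatial activations.

    Entry (i, j) is the average product of channels i and j across positions.
    """
    n = len(features[0])
    return [[sum(x * y for x, y in zip(a, b)) / n for b in features] for a in features]

def style_loss(generated: list[list[float]], style: list[list[float]]) -> float:
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(generated), gram_matrix(style)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c)) / (c * c)

feats = [[1.0, 2.0], [3.0, 4.0]]  # 2 channels x 2 positions (toy values)
print(gram_matrix(feats))  # [[2.5, 5.5], [5.5, 12.5]]
print(style_loss(feats, feats))  # 0.0
```

Because the Gram matrix sums over positions, it records which textures and colors occur together while discarding where they appear, which is why style can be transferred independently of layout.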

Artists and designers use NST to transform photos into paintings or blend multiple artistic styles. For example, applying Van Gogh’s brushwork to a modern cityscape results in a visually stunning hybrid. The original optimization-based method is slow, but feed-forward NST models can process images in real time, which enables instant previews and rapid experimentation.

The real strength of NST lies in its ability to democratize creativity. Anyone can turn a snapshot into a masterpiece with just a few clicks, showing how AI can generate images that are both personal and visually compelling.

| Model Type | Strengths | Limitations | Real-World Use |
|---|---|---|---|
| GANs | High realism, detail | Artifacts, complex scenes | Portraits, fashion, art synthesis |
| Diffusion | Flexibility, speed | Resource-intensive | Landscapes, abstract art |
| VAEs | Style blending, control | Lower sharpness | Logos, concept exploration |
| NST | Real-time style mixing | Limited to style transfer | Artistic filters, photo painting |

The growth of these models is fueling a market boom. According to the AI Image Generator Market Growth Analysis, the adoption of generative AI techniques is transforming industries worldwide. Understanding these core models is foundational for anyone aiming to leverage AI’s creative potential.

Real-World Applications and Use Cases

Artificial intelligence is transforming how we create, share, and experience images. The impact of AI image generation extends far beyond its technical processes, as these tools now reach a wide range of industries and everyday experiences.

Creative Industries: Art, Design, and Marketing

In creative fields, AI image generation is redefining workflows for artists, designers, and marketers. Professionals use AI to produce original artwork, generate rapid prototypes, and create on-brand visuals for social media and advertising campaigns.

AI-generated logos tailored to brand identity

Marketing visuals that adapt to trends in real time

Tattoo designs created in seconds using platforms like AI Tattoo Art Generator

With AI, creative teams can iterate faster, explore new styles, and personalize content for different audiences.

Entertainment and Media

Entertainment studios are leveraging AI image generation to streamline concept art, character design, and animation. Game developers use generative models to build immersive worlds, while filmmakers rely on AI for storyboarding and visual effects.

Movie concept art generated from simple prompts

Game environments created with photorealistic detail

Animation pipelines enhanced with AI-assisted rendering

These advances enable studios to bring complex visions to life with greater speed and flexibility.

E-commerce and Retail

In e-commerce, AI image generation helps retailers visualize products, create lifestyle imagery, and offer virtual try-ons. AI can generate multiple product variations and personalize recommendations based on customer preferences.

Product mockups for catalogs and online stores

Virtual fitting rooms for apparel and accessories

Lifestyle images tailored to target demographics

Retailers benefit from faster content creation and improved customer engagement.

Education and Research

Educators and researchers rely on AI image generation to communicate complex concepts visually. AI-generated diagrams, simulations, and synthetic datasets support interactive learning and scientific exploration.

Visual aids for STEM subjects and technical training

Synthetic data for developing and testing new AI models

Custom illustrations for textbooks and online courses

These tools make learning more accessible and research more efficient.

Accessibility and Personalization

AI image generation is opening new doors for accessibility and personalized experiences. Individuals with disabilities can create visual content using voice or text, while anyone can generate unique art or gifts tailored to their interests.

Tools for converting descriptions into images

Personalized art for home decor or gifting

Adaptive interfaces that enhance creative expression

As AI adoption grows, its impact on creativity, commerce, and communication continues to expand. For deeper insights into industry trends and adoption rates, review the latest AI Image Generator Market Statistics 2024, which details growth across sectors.

DaVinci AI: Empowering Users with Advanced AI Art Generation

Imagine having a creative assistant that understands your vision and brings it to life in seconds. DaVinci AI stands at the forefront of this shift, harnessing the latest generative models to make advanced image creation accessible to everyone.

DaVinci AI offers a rich suite of tools designed for creativity and efficiency:

AI Art Generator for unique artworks from simple prompts

Tattoo Generator for custom tattoo concepts

Logo Generator and Signature Generator for branding and personalization

Additional tools for editing, style exploration, and creative history

The platform simplifies AI image generation, letting users choose from curated styles, input prompts, and receive instant, high-quality results. Whether you are on the web or using iOS or Android, DaVinci AI ensures creativity is always within reach.

Professionals and hobbyists alike use DaVinci AI for presentations, social media campaigns, marketing visuals, tattoo inspiration, and personal projects. With features like the Style Explorer, Prompt Library, and advanced editing tools, users can experiment and refine their creations with ease.

Trusted by over one million users, DaVinci AI is built for both individuals and businesses seeking commercial-grade art generation. The platform’s user-friendly design and robust capabilities reflect the rapid adoption and market growth seen across the industry, as highlighted in recent AI Image Generator Market Size and Forecast reports.

DaVinci AI lets you experience firsthand how AI generates images, transforming ideas into professional visuals with just a prompt.

The Future of AI Image Generation: Trends and Innovations for 2026

Artificial intelligence is rapidly reshaping how we create and experience visuals. In 2026, the answer to "How does AI generate images?" is evolving beyond simple text-to-image conversion. Today’s innovations are opening new creative possibilities, making AI image generation more interactive, multimodal, and accessible than ever.

Multimodal AI: Beyond Text-to-Image

In 2026, multimodal AI models are at the forefront of innovation. These systems can process and combine various inputs, such as text, images, sketches, and even audio descriptions. This enables users to guide image creation in ways that closely match their intent.

For example, a designer might describe a scene with their voice, upload a rough sketch, and type a prompt, allowing the AI to blend all cues. This evolution in AI image generation creates more nuanced and expressive results, bridging the gap between imagination and digital art.

Real-Time and Interactive Generation

Speed and interactivity are now critical. Some AI platforms can generate images in real time or close to it, allowing instant feedback and on-the-fly adjustments during the creative process. Artists and marketers can tweak prompts, adjust styles, or make changes collaboratively with AI in a live session.

This shift empowers users to iterate quickly and experiment with different ideas, fostering a more dynamic creative workflow. It also supports collaborative work, where multiple contributors refine images together.

Improved Customization and Control

Users now have unprecedented control over their AI-generated content. Advanced platforms allow fine-tuning of image attributes, such as style, mood, and composition, through adjustable parameters and sliders. Some systems even let users train personalized models based on their unique preferences or brand guidelines.
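One widely used technique for this kind of personalization is low-rank adaptation (LoRA), which freezes a model's weight matrix W and learns a small update B·A instead. Whether a given platform uses LoRA is an assumption here; the sketch shows only the arithmetic, with toy matrices as nested lists and hypothetical helper names.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain matrix product of an (m x k) and a (k x n) matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def merge_lora(w: list[list[float]], b: list[list[float]], a: list[list[float]],
               scale: float = 1.0) -> list[list[float]]:
    """Return W + scale * (B @ A): the personalized weights used at inference."""
    delta = matmul(b, a)
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]

def trainable_params(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """(full fine-tuning, LoRA) trainable parameter counts for one d_out x d_in layer."""
    return d_out * d_in, rank * (d_out + d_in)

w = [[1.0, 0.0], [0.0, 1.0]]  # frozen 2x2 weight
b = [[1.0], [2.0]]            # 2x1 trainable factor
a = [[3.0, 4.0]]              # 1x2 trainable factor (rank 1)
print(merge_lora(w, b, a))            # [[4.0, 4.0], [6.0, 9.0]]
print(trainable_params(768, 768, 4))  # (589824, 6144)
```

With rank 4 on a 768 x 768 layer, LoRA trains about 1 percent as many parameters as full fine-tuning, which is what makes storing a separate model per user or per brand affordable.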

This advancement means outputs are more tailored and relevant. Whether for business branding or personal art, customization ensures that the generated visuals align closely with the user’s vision.

Ethical Considerations and Responsible AI

As AI image generation becomes mainstream, ethical concerns are increasingly important. Addressing bias in training data, ensuring copyright compliance, and providing transparency about how images are produced are key priorities.

Understanding how AI generates images also involves evaluating the sources of training data and implementing safeguards against misuse. Platforms are adopting clearer documentation, provenance tracking, and user controls to build trust and encourage responsible use.

Open Source and Community-Driven Innovation

The rise of open-source AI models and collaborative datasets is democratizing access to image generation tools. Developers and artists worldwide contribute to shared projects, accelerating innovation and making advanced capabilities available to all skill levels.

This community-driven approach is transforming AI image generation by fostering experimentation and knowledge sharing. Open platforms invite feedback, leading to more robust, diverse, and creative AI systems.

Statistics and Market Growth

The AI image generation market is experiencing rapid expansion. According to the AI Image Generator Market Report 2025, the sector is projected to grow by more than 30 percent annually through 2025. This growth is driven by adoption in fields like marketing, gaming, education, and design.

| Year | Projected Market Size | Annual Growth Rate |
|---|---|---|
| 2023 | $1.2 Billion | 28% |
| 2025 | $2.1 Billion | 33% |
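The two table rows can be cross-checked with the compound annual growth rate (CAGR) formula, (end / start)^(1 / years) - 1. This is simple arithmetic on the report's projected figures, not additional data, and `implied_cagr` is a hypothetical helper.

```python
def implied_cagr(start_value: float, end_value: float, years: float) -> float:
    """Constant yearly growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

rate = implied_cagr(1.2, 2.1, years=2)  # $1.2B (2023) -> $2.1B (2025)
print(f"implied annual growth: {rate:.1%}")  # 32.3%
```

An implied rate of about 32 percent per year sits between the 28 and 33 percent annual figures listed in the table.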

As more organizations embrace AI for visual content, the demand for innovative and reliable tools will continue to rise.

Challenges and Opportunities Ahead

Despite remarkable progress, several challenges remain:

Scaling models to ultra-high resolutions without artifacts

Reducing bias and improving interpretability

Ensuring ethical and transparent outputs

At the same time, there are immense opportunities. AI is becoming a true creative partner, helping users break through artistic barriers and reimagine what’s possible. As the technology matures, AI image generation will only become more exciting, collaborative, and human-centric.